Top 7 AI detection tools compared: which one should you choose?

AI detectors promise clarity in a noisy landscape, yet results can mislead when used without context. If you review essays, manage a newsroom, or run content operations, you need tools that surface risk without turning guesswork into verdicts. This comparison focuses on real workflows rather than hype.

We examine seven options used in classrooms and editorial teams: StudyPro, Winston AI, GPTZero, Copyleaks, Originality AI, Grammarly, and QuillBot. You will learn what each does well, when it struggles, and where it fits. We also explain how detectors work at a high level, including perplexity signals, classifier ensembles, and emerging watermark ideas.

Because every score is probabilistic, you also get guidance on setting thresholds, requesting drafts, and documenting decisions. That process matters more than any single percentage. Use these sections to design a review path that is consistent, transparent, and fair. With the right mix of tooling and human judgment, you can protect integrity, reduce disputes, and keep authorship conversations collaborative instead of combative. Set expectations early and apply them with care.

StudyPro

What it is: StudyPro is an academic writing platform with an AI detector, plagiarism checker, paraphraser, and writer in one editor. It targets student and research workflows rather than general web content. The site advertises unlimited AI scans with a free account during the current rollout.

How it detects: the StudyPro AI detection tool is powered by multiple detection engines and retrained regularly. The platform positions detection as part of a step-by-step academic process, not a one-off scan.

Strengths: one workspace simplifies evidence gathering: assignment briefs, outlines, drafts, and detection reports live together. That reduces context loss between tools and helps with audit trails. Independent reviews highlight the convenience of running AI and plagiarism checks inside the same editor.

Watch-outs: as with all detectors, treat scores as indicators, not verdicts. The platform itself reminds users that results can contain errors.

Best for: instructors and students who need integrated checks next to the writing environment, with low friction and no per-scan cost.

Winston AI

What it is: Winston AI serves education and publishing teams with OCR, image detection, and PDF-style reports. Pricing is credits-based, which suits periodic audits and semester cycles. Accounts support shared access so instructors and reviewers can see the same results.

How it detects: the vendor claims very high accuracy on pure AI text across major model families. Results appear as document assessments with supporting context that points to likely AI segments.

Strengths: OCR is the difference maker for scanned printouts and PDFs, photographed pages, and handwritten work. Reports are easy to export and attach to case files or LMS submissions. Bulk processing and simple foldering help organise class sections or publication projects.

Watch-outs: accuracy figures are vendor-reported and can shift under paraphrasing or light edits. Thresholds should be tuned to your domain rather than copied from marketing pages. Publish an appeals path so students and authors can provide drafts and notes.

Best for: educators and compliance teams that prioritise OCR, clean reports, and straightforward review.

GPTZero

What it is: GPTZero is widely adopted in schools and universities with Canvas and Moodle integrations. Batch scanning and sentence-level highlighting make classroom review practical. A free tier makes initial adoption easy.

How it detects: classifiers are trained to separate human and AI writing, then applied at document and sentence levels. Highlighted passages give instructors concrete places to start conversations about authorship.

Strengths: LMS integrations reduce copy-paste friction and keep work inside existing workflows. Bulk processing supports department-wide checks at midterm and finals. The interface is clear, and flagged lines are easy to discuss during office hours.

Watch-outs: accuracy drops on mixed or heavily edited text, which is common in real assignments. Very formal human prose can appear machine-like if style norms are rigid. Require revision history or planning notes to add context before any decision.

Best for: schools that need in-platform scanning and sentence-level feedback for fair, documented reviews.

Copyleaks

What it is: Copyleaks offers AI detection and plagiarism scanning with APIs and LMS connectors. Coverage includes many languages, which helps international programs and global teams. Enterprise controls support roles, logs, and scalable deployment.

How it detects: the system uses model-agnostic features and updates detectors as new generators appear. AI Logic explanations add short rationales that help reviewers understand why a passage was flagged.

Strengths: multilingual detection reduces blind spots in diverse classrooms and cross-border teams. The API allows automated checks in CMS, LMS, or editorial pipelines. A sandbox helps engineers and admins validate behavior before rollout.

Watch-outs: treat accuracy claims as directional until you validate on your genres and rubrics. Very formal human writing can trigger flags, so pair results with human review and sources. Tune thresholds per department to avoid unnecessary escalations.

Best for: universities and enterprises that need multilingual coverage, an API, and audited workflows at scale.

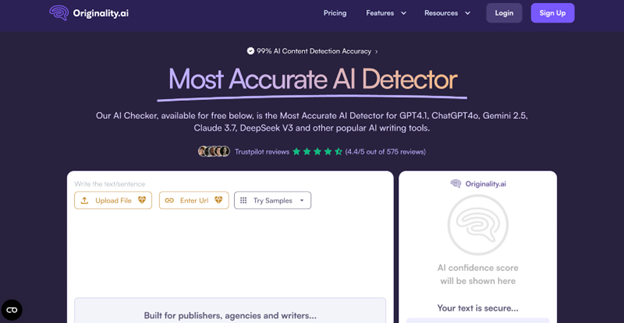

Originality AI

What it is: Originality AI targets publishers, SEO teams, and agencies with team controls and project reporting. A Chrome extension captures drafting evidence in Google Docs, which helps during authorship disputes. Two detector modes, Lite and Turbo, allow different balances of speed and sensitivity.

How it detects: classifiers are tuned for web content and updated against new model families. Benchmark posts and release notes explain coverage changes so teams can plan migrations.

Strengths: evidence capture supports audits when an editor needs to see the creation trail. Role-based access controls fit multi-writer environments with varying permissions. Scheduled scans and bulk URL checks streamline pre-publish reviews.

Watch-outs: vendor accuracy claims require local testing on your site’s styles and languages. Debates around bias and fairness continue, which means you need a reviewer protocol. Set written criteria for escalation so editors act consistently.

Best for: content leads and editors who need team controls, audit trails, and repeatable pre-publication checks.

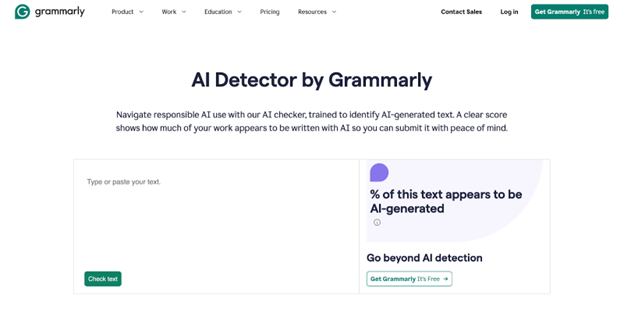

Grammarly

What it is: Grammarly includes a free AI detector inside its broader writing suite. The readout is simple and fast, which suits quick spot checks. Many writers already use the editor, so adoption needs little training.

How it detects: the detector leans toward conservative signals designed for triage rather than full investigations. It gives a basic likelihood indicator that prompts a follow-up when needed.

Strengths: zero-setup checks make it easy to screen drafts before deeper review. A familiar interface reduces friction for busy teams and classrooms. It works well as the first pass in an everyday workflow.

Watch-outs: sensitivity can vary across genres, and public benchmarking is limited. Do not treat a single percentage as grounds for action in high-stakes contexts. Escalate to a specialised detector and request drafts or sources.

Best for: writers and instructors who want quick screening inside a tool they already use.

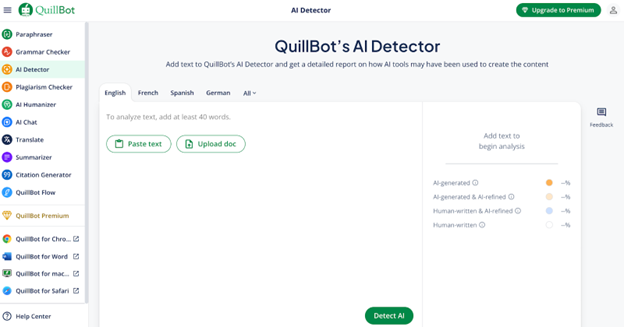

QuillBot

What it is: QuillBot’s AI Detector sits next to its paraphraser, grammar checker, and summariser. Bulk upload supports high-throughput screening across many files. Reports can be stored and shared within the same account that teams already use.

How it detects: the classifier is positioned as model-agnostic and provides both an overall score and local highlights. Reviewers can jump to likely AI zones rather than scanning blind.

Strengths: throughput is strong for editors processing many drafts in one session. The interface is consistent with QuillBot’s other tools, which keeps the learning curve short. It works well as a triage layer before deeper, policy-grade analysis.

Watch-outs: enterprise features are lighter than those of API-first vendors, which may limit automation plans. Paraphrased or lightly edited AI text can still slip through, so confirm important cases. Set internal guidance for thresholds, sampling, and escalation.

Best for: editors and instructors who batch-screen drafts and want quick, local highlights without heavy integration work.

How AI detectors actually work

Most detectors estimate how likely a passage is to come from a language model. They combine probabilistic signals, then map those signals to a confidence score. Thresholds vary by vendor and use case, which means two tools can disagree on the same paragraph.
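To make the disagreement concrete, here is a minimal sketch of how signals might be combined into a score. Everything in it is an assumption for illustration: the signal names, weights, bias, and the two vendor cut-offs are hypothetical and do not describe any real product.

```python
import math

def confidence(signals, weights, bias):
    """Map weighted signals to a 0..1 score with a logistic function."""
    z = bias + sum(weights[name] * value for name, value in signals.items())
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical, pre-normalised signals for one paragraph (higher = more AI-like).
signals = {"low_perplexity": 0.8, "low_burstiness": 0.6}
weights = {"low_perplexity": 2.0, "low_burstiness": 1.5}

score = confidence(signals, weights, bias=-1.5)

# Two imaginary vendors apply different cut-offs to the same score.
vendor_thresholds = {"tool_a": 0.60, "tool_b": 0.80}
verdicts = {tool: score >= cut for tool, cut in vendor_thresholds.items()}

print(round(score, 3), verdicts)
```

The same paragraph is flagged by one imaginary tool and cleared by the other, even though the underlying score is identical. Real vendors also differ in the signals and weights themselves, which widens the gap further.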

Under the hood, today’s tools use combinations of:

- Perplexity signals: machine-written text often has low surprise for the model that could have produced it. Research like DetectGPT formalised this with curvature tests over a model’s probability surface. These methods work best on longer, unedited passages and degrade when text is paraphrased, heavily revised, or style-matched to a specific author.

- Classifier ensembles: supervised models are trained on human and AI corpora, plus features like burstiness, sentence length, punctuation patterns, and syntactic markers. Vendors rarely disclose full training distributions, so domain drift and genre shifts matter. Performance also depends on language, topic, and chunk size, with short excerpts creating unstable predictions.

- Watermarks: during generation, models can embed detectable token patterns that later serve as provenance signals. Watermarks help when enabled and preserved, yet most public chat systems do not expose verifiable watermarks, and simple edits or translations can disrupt the pattern. Cryptographic provenance and signed outputs are being explored but remain uneven across tools.
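The perplexity idea can be sketched with a toy model. The example below assumes a tiny add-one smoothed unigram model built from a reference string, which is far simpler than the neural language models real detectors rely on. The helper names are hypothetical, and the sentence-length spread is only a crude stand-in for burstiness.

```python
import math
import re
from collections import Counter

def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())

def unigram_model(reference_text):
    """Return a function giving add-one smoothed unigram probabilities."""
    counts = Counter(tokenize(reference_text))
    total = sum(counts.values())
    vocab = len(counts) + 1  # one extra slot for unseen words
    return lambda word: (counts[word] + 1) / (total + vocab)

def perplexity(text, prob):
    """Exponential of the average negative log-probability per token."""
    tokens = tokenize(text)
    if not tokens:
        raise ValueError("no tokens to score")
    avg_neg_log = -sum(math.log(prob(t)) for t in tokens) / len(tokens)
    return math.exp(avg_neg_log)

def sentence_length_spread(text):
    """Standard deviation of sentence lengths in words (a rough burstiness proxy)."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    if not sentences:
        raise ValueError("no sentences to score")
    lengths = [len(tokenize(s)) for s in sentences]
    mean = sum(lengths) / len(lengths)
    return math.sqrt(sum((n - mean) ** 2 for n in lengths) / len(lengths))

prob = unigram_model("the cat sat on the mat the dog sat on the rug")
predictable = perplexity("the cat sat on the mat", prob)
surprising = perplexity("quantum ferrets juggle violins", prob)

print(predictable < surprising)
```

Text that the model finds predictable gets a lower perplexity than text full of words it has never seen. A detector treats unusually low perplexity as one hint among several, not as proof.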

Important context: OpenAI shut down its public text classifier in July 2023, citing low accuracy, and encouraged a focus on provenance methods and multi-signal review rather than generic one-shot detectors. That guidance still shapes today’s best practice: pair detection scores with drafts, sources, and human judgment.

What can go wrong: limitations you must plan for

False positives on certain writing styles

Detectors often misclassify non-native English, very formal prose, and formulaic student writing. Simple syntax, limited vocabulary, or rigid structure can look “machine-like.” Use extra care in education and hiring, calibrate on local samples, and publish an appeals path.

Paraphrasing and light human editing reduce accuracy

Mixed passages and AI-edited human text are the hardest cases. Translation passes, paraphrase tools, or templated rewrites can scramble detector signals. Ask for drafts and version history, then compare reasoning, sources, and revision notes before deciding.

Scores are probabilistic

A “percent AI” is a confidence estimate, not a measurement of how much text a model wrote. Thresholds differ by vendor, and short excerpts create unstable predictions. Expect disagreements between tools and treat scores as leads that require corroboration.
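One way to ground a threshold is to calibrate it on text you already know is human-written. The sketch below assumes you have detector scores for such local samples. The scores, the 10% target, and the function names are hypothetical placeholders.

```python
import math

def threshold_for_fpr(human_scores, max_fpr):
    """Pick a cut-off so that at most max_fpr of known-human samples score above it."""
    if not human_scores:
        raise ValueError("need at least one known-human sample")
    ordered = sorted(human_scores)
    allowed = math.floor(max_fpr * len(ordered))  # human samples we tolerate flagging
    index = max(len(ordered) - allowed - 1, 0)
    return ordered[index]

def false_positive_rate(human_scores, threshold):
    """Share of known-human samples that would be flagged (score > threshold)."""
    flagged = sum(1 for s in human_scores if s > threshold)
    return flagged / len(human_scores)

# Hypothetical detector scores for ten essays known to be human-written.
local_human_scores = [0.02, 0.05, 0.08, 0.10, 0.12, 0.15, 0.22, 0.31, 0.44, 0.71]

cutoff = threshold_for_fpr(local_human_scores, max_fpr=0.10)
observed = false_positive_rate(local_human_scores, cutoff)

print(cutoff, observed)
```

Ten samples are far too few for a real policy. The point is the procedure: measure how often the tool flags genuine human work in your own genre, then set the cut-off from that rate instead of from a vendor default.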

Short or noisy inputs confuse models

Captions, lists, tables, code blocks, and OCR artifacts from scans degrade reliability. Clean the text when possible, segment long documents into coherent sections, and avoid judging on snippets alone.
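The segmenting advice can be sketched as a small pre-processing step. This example assumes paragraphs are separated by blank lines and uses a hypothetical minimum of 150 words per chunk. Choose a figure that your own validation supports.

```python
import re

MIN_WORDS = 150  # hypothetical minimum; tune on your own samples

def segment(text, min_words=MIN_WORDS):
    """Group consecutive paragraphs into chunks of at least min_words words.

    Returns (chunks, leftover). The leftover is trailing text that is too
    short to score on its own and should not be judged in isolation.
    """
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]
    chunks, current = [], []
    for para in paragraphs:
        current.append(para)
        if sum(len(p.split()) for p in current) >= min_words:
            chunks.append("\n\n".join(current))
            current = []
    leftover = "\n\n".join(current)
    return chunks, leftover

sample = "\n\n".join(["word " * 4, "word " * 3, "word " * 2])
chunks, leftover = segment(sample, min_words=5)

print(len(chunks), len(leftover.split()))
```

Chunks keep paragraphs in their original order, so each one stays a coherent stretch of the document. Anything left over is reported separately so reviewers can see it was skipped for length.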

The bottom line is that you should never rely on a single score. Combine tool output with drafts, sources, metadata, and subject-matter review, and document your thresholds and decision criteria so outcomes stay consistent and defensible.

Interpreting results without overreacting

- Treat each score as a lead: ask for revision history, notes, outlines, and sources. Review voice and reasoning. Confirm file metadata, timestamps, and version names. If possible, compare against earlier submissions from the same writer to check stylistic continuity.

- Look for local spikes: sentence-level flags are more actionable than a single document-level percentage. Tools like GPTZero and QuillBot highlight specific lines. Read the surrounding context, then ask for a short rewrite of the flagged passage. Consistent improvement on request suggests genuine authorship.

- Check risk factors: very formal prose from non-native writers can look “machine-like.” Apply extra diligence and offer a fair path to resolve concerns. Set minimum text lengths before judging. Segment by genre, since lab reports, abstracts, and product pages have rigid patterns that skew signals.

- Expect evasions: humanisers and paraphrasers reduce detectability. Use a portfolio approach: drafts, style consistency, subject knowledge, and plagiarism checks. Run two detectors when the stakes are high. Document thresholds, escalation steps, and outcomes. Invite an explanation from the writer, record evidence, and keep an appeals process so decisions remain fair and repeatable.
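The checklist above could be written down as an explicit triage rule so reviewers apply it the same way each time. The sketch below is one hypothetical encoding: the 0.8 threshold, the 150-word minimum, the detector names, and the outcome labels are all assumptions. Note that it never returns a verdict, only a next step.

```python
from dataclasses import dataclass

@dataclass
class Review:
    scores: dict       # detector name -> score between 0 and 1
    word_count: int
    has_drafts: bool   # whether revision history or drafts are on file

def triage(review, threshold=0.8, min_words=150):
    """Return the next review step; a score alone never decides the case."""
    if review.word_count < min_words:
        return "too short to judge"
    flagged = [name for name, s in review.scores.items() if s >= threshold]
    if not flagged:
        return "no action"
    if len(flagged) < len(review.scores):
        return "detectors disagree: request drafts"
    if review.has_drafts:
        return "human review with drafts"
    return "request drafts, then human review"

case = Review(scores={"detector_a": 0.91, "detector_b": 0.62},
              word_count=640, has_drafts=False)

print(triage(case))
```

Writing the rule down also gives you something to log. Recording the inputs and the returned step for each case supports the documentation and appeals process described above.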

Tool-by-tool takeaways

StudyPro

StudyPro suits students and academics who want one workspace for writing and verification. It combines a paraphraser, AI detector, and plagiarism checker in a single academic workflow.

Winston AI

Winston AI excels at OCR and clear reporting for scanned or handwritten work. Exportable reports and shared accounts make classroom and team reviews efficient.

GPTZero

GPTZero fits schools that need seamless, in-platform checks. LMS integrations and sentence-level highlights support practical, classroom-focused reviews.

Copyleaks

Copyleaks is designed for scale with multilingual detection and a robust API. Universities and enterprises can run automated, auditable checks across large programmes.

Originality AI

Originality AI serves publishers and SEO teams that manage authorship at scale. Audit trails and team controls help editors verify provenance before publication.

Grammarly

Grammarly’s detector offers quick, convenient first-pass screening inside a familiar editor. It works well for writers and instructors who need zero-setup spot checks.

QuillBot

QuillBot helps editors and instructors screen many drafts in one session. Batch uploads and in-app highlights speed triage without complex technical setup.

Final guidance: choose with purpose

Detectors can help, but they are decision support, not decision makers. The most durable setup is a two-layer approach:

- A workflow-fit detector from the list above, chosen for your context

- A human review protocol with drafts, sources, and clear communication

Document how you use the tool, be transparent with students and authors, and avoid single-score judgments. The research consensus still warns against overreliance, especially in education, and even vendors agree that results need interpretation.

If you align the tool to your job, test on your own samples, and pair results with a thoughtful review, you will catch the problems that matter and avoid the harms that come from false certainty. Train reviewers, set escalation steps, and keep a written appeals path so outcomes remain fair, consistent, and defensible across courses, teams, and time. Update thresholds quarterly and revalidate on samples.