AI serves digital media based on users' real-time emotions

Founded by Matthew Mayes and Claudio Piovesana and launching at Unbound, A-dapt is the world’s first adaptive-media consultancy, created to commercialise and scale a new AI-based technology format called adaptive-media in the UK and beyond.

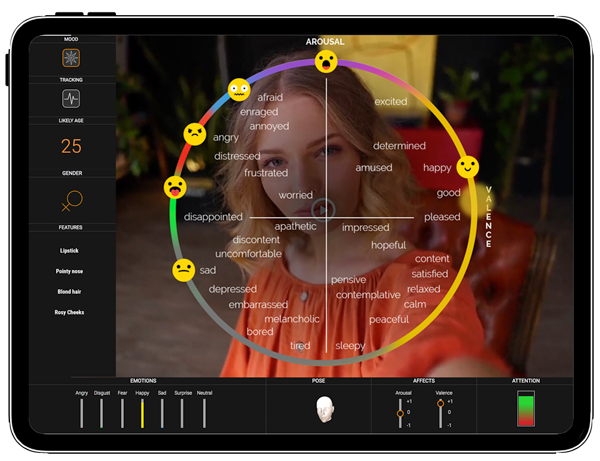

Adaptive-media is digital media that uses machine learning to adapt naturally to the viewer in real-time based upon how they appear and how they react, just as humans instinctively adapt to each other, and without sending personal data to a server. Adaptive-media has applications across marketing, education, health, compliance and many other sectors.

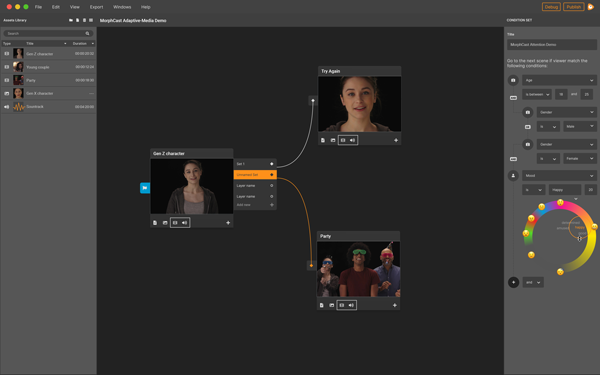

A-dapt licenses technology built by MorphCast, an existing Italy-based startup created by leading Internet innovator and pioneer Stefano Bargagni, who had previously created the world’s first e-commerce platform, CHL, in 1993, a full year before Amazon launched.

Bargagni’s insight came when he saw his young daughters using their early iPhone 4s and realised that the device camera could be used for more than just filming and selfies: face analysis could be used to adapt content to the user in real-time. He also realised that the processing capabilities of smartphones would increase exponentially, allowing machine learning to take place on the device and within the browser. This means that personal and biometric data remains within the ‘sandbox’ of the browser and is not sent to a server. As a result, his patented technology is fully GDPR compliant.

At the Cannes Lions International Festival of Advertising in June, the team launched a desktop application enabling agencies, brands and creators to create adaptive-media experiences that, unlike traditional linear experiences, adapt based upon factors such as viewer emotions, demographics and - interestingly - attention.

Evolution of digital media

Digital media has come a long way in a short space of time, from VR (Virtual Reality) to AR (Augmented Reality) to MR (Mixed Reality) and image recognition. You might wonder whether there is anywhere left to take the art form. Enter adaptive-media.

Adaptive-media is a new paradigm made possible by recent advances in machine learning, edge-based computing, device cameras and processing power. There are now 2.7 billion smartphones crying out for new non-linear digital formats.

The potential applications of adaptive-media

Because adaptive-media can branch and adjust based on who’s watching and how they react, it lends itself to a variety of applications. According to Mayes, the applications for adaptive-media are potentially limitless:

- “Retailers can create a Christmas video advert that plays in a conventional linear way, but then can adapt to the viewer, be that a child interested in toys, a parent looking for a turkey or a grandparent trying to find the must-have toys.”

- “Fashion brands can gauge customers’ real-time emotional reaction to their collection and we can even select products based upon the positivity of the user’s expression.”

- “Charity adverts can actually reward the charity by seeing if the viewer has actually read the message, by making a token donation for a fully validated impression.”

- “We can even create a format for entertainment such as adaptive-media pop promos where the artist’s performance changes based upon the emotional reaction of the fan.”
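To make the branching idea concrete, here is a minimal Python sketch of how an adaptive advert might choose its next scene, using the Christmas example above. It is an illustration only, not MorphCast's or A-dapt's implementation: the `ViewerSignal` fields, the scene names, the age-group labels and the 0.5 attention cut-off are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ViewerSignal:
    """Hypothetical on-device estimate of the viewer (assumed fields)."""
    age_group: str    # illustrative labels: "child", "adult", "senior"
    emotion: str      # illustrative labels: "happy", "neutral", "bored"
    attention: float  # 0.0 (looking away) to 1.0 (fully attentive)


# Illustrative branch table for the Christmas advert example.
BRANCHES = {
    "child": "toys_scene",
    "adult": "turkey_scene",
    "senior": "gift_guide_scene",
}


def select_branch(signal: ViewerSignal, default: str = "linear_scene") -> str:
    """Pick the next scene, falling back to the linear cut when unsure."""
    # Assumed rule: do not branch if the viewer is barely watching.
    if signal.attention < 0.5:
        return default
    # Unknown age groups also fall back to the linear cut.
    return BRANCHES.get(signal.age_group, default)


next_scene = select_branch(ViewerSignal("child", "happy", 0.9))
```

In this sketch the advert keeps playing its conventional linear cut unless the viewer is attentive and falls into a known group, which mirrors the "plays in a conventional linear way, but then can adapt" behaviour Mayes describes.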

Adaptive-media could revolutionise advertising, explained Mayes: “Sixty percent of mobile phone banner clicks are mistakes; the banner format is not well designed for the device. To make it worse, our personal data is being monetised. With adaptive-media we will be able to capture the user’s attention with this conversational format, which can be measured anonymously along with the advert impression for the brand.”

Mayes further explained that this new media form could turn advertising on its head: users could be awarded attention tokens to spend with brands after engaging with their content, thereby monetising their attention for their own benefit.

This type of media could also address the biggest barrier to remote learning: after about 15 minutes in front of a screen, viewers start to lose attention. The media could alert them to this and suggest different activities to increase their attention.
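As a rough illustration of the remote-learning idea, the following Python sketch flags a drop in attention using a rolling average of recent attention scores. It is not taken from A-dapt or MorphCast: the `AttentionMonitor` class, the window size and the 0.4 threshold are hypothetical choices, and the scores are assumed to come from some on-device attention estimate between 0.0 and 1.0.

```python
from collections import deque


class AttentionMonitor:
    """Hypothetical rolling-average attention check (assumed design)."""

    def __init__(self, window: int = 5, threshold: float = 0.4) -> None:
        # Only the most recent `window` scores are kept.
        self.scores = deque(maxlen=window)
        self.threshold = threshold

    def update(self, score: float) -> bool:
        """Record a score; return True when the learner should be nudged."""
        self.scores.append(score)
        # Wait for a full window so a single glance away does not trigger.
        window_full = len(self.scores) == self.scores.maxlen
        average = sum(self.scores) / len(self.scores)
        return window_full and average < self.threshold


monitor = AttentionMonitor(window=3, threshold=0.4)
alerts = [monitor.update(s) for s in [0.9, 0.8, 0.3, 0.2, 0.1]]
```

Averaging over a window rather than reacting to a single reading is one way to avoid interrupting a learner who only looks away briefly. A real lesson player would then use the `True` result to suggest a break or a different activity.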

Another use case is in the health sector. For example, a patient going in for a procedure can watch actors talk them through the process, and the messaging can adjust if the patient displays signs of anxiety. Using emotion analysis, the media can adapt to interact with the user in a different “and hopefully more powerful way of capturing, measuring and rewarding attention,” said Mayes.

While video is good for storytelling, adaptive-media is better for conversations, as it adds an emotional engagement layer to content and stories.

A-dapt is currently looking for half a million pounds in investment over three years to commercialise and scale across various sectors.

Unbound London 2019

“There is a great mix of people and major brands who attend, and they have content of genuine interest and relevance. It’s an opportunity to come and talk about the technology - also as a startup finances are tight so it’s not possible to exhibit at a lot of events which act as a type of advertising, but Unbound London makes it possible.”